2.11. Human-Computer Interface

215. The idea of eliminating the gap between human thought and computer responsiveness is an obvious one, and a number of companies are working hard on promising technologies. One of the most visible of these is voice recognition software that allows the computer to type as you speak, or allows users to control software applications by issuing voice commands.

216. Even the most advanced software in this category, while impressively accurate and far ahead of the voice recognition technology of a mere decade ago, is still not at the point where people can walk up to their computer and start issuing voice commands without a good deal of setup, training, and fine tuning of microphones and sound levels. Widespread, intuitive use of voice recognition technology still appears to be years away.

217. For now, our interface with the Internet remains the lowly personal computer. With its clumsy interface devices (keyboard and mouse, primarily), the personal computer is a makeshift bridge between the ideas of human beings and the world of information found on the Internet. These devices simply cannot keep pace with the speed of thought of which the human brain is capable.

218. Consider this: a person with an idea who wishes to communicate that idea to others must translate that idea into words, then break those words into individual letters, then direct her fingers to punch physical buttons (the keyboard) corresponding to each of those letters, all in the correct sequence. Not surprisingly, typing speed becomes a major limiting factor here: most people can only type around sixty words per minute. Even a fast typist can barely achieve 120 words per minute. Yet the spoken word approaches 300 words per minute, and the speed of 'thought' is obviously many times faster than that.

219. Pushing thoughts through a computer keyboard is sort of like trying to put out a raging fire with a garden hose: there is simply not enough bandwidth to move things through quickly enough. As a result, today's computer / human interface devices are significant obstacles to breakthroughs in communicative efficiency.
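The bandwidth gap described in paragraphs 218 and 219 can be made concrete with a little arithmetic. The sketch below takes the three rates quoted in the text (60, 120, and 300 words per minute) at face value; the 1,500-word message length and the function names are assumptions chosen only for illustration.

```python
# Rates quoted in the text above, in words per minute.
RATES_WPM = {
    "typical typist": 60,
    "fast typist": 120,
    "speech": 300,
}


def minutes_to_convey(word_count: int, words_per_minute: float) -> float:
    """Return the minutes needed to transmit word_count words at the given rate."""
    if words_per_minute <= 0:
        raise ValueError("words_per_minute must be positive")
    return word_count / words_per_minute


def slowdown_vs_speech(words_per_minute: float) -> float:
    """Return how many times slower a channel is than the quoted speech rate."""
    return RATES_WPM["speech"] / words_per_minute


if __name__ == "__main__":
    message_words = 1500  # hypothetical message length
    for label, rate in RATES_WPM.items():
        minutes = minutes_to_convey(message_words, rate)
        print(f"{label}: {minutes:.1f} min for {message_words} words")
```

At the quoted rates, a typical typist needs five times as long as a speaker to convey the same message, before any allowance is made for the still faster speed of thought.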

220. The computer mouse is also severely limited. I like to think of the mouse as a clumsy translator of intention: if you look at your computer screen, and you intend to open a folder, you have to move your hand from your keyboard to your mouse, slide the mouse to a new location on your desk, watch the mouse pointer move across the screen in an approximate mirror of the mouse movement on your desk, then click a button twice. That's a far cry from the idea of simply looking at the icon and intending it to open.

221. Today's interface devices are little more than rudimentary translation tools that allow us to access the world of personal computers and the Internet in a clumsy, inefficient way. The Internet is so valuable that even these devices grant us immeasurable benefits. A new generation of computer/human interface devices, however, would greatly multiply those benefits and open up a whole new world of possibilities for exploiting the power of information and knowledge.

2.11.1. Hand Controlled Computers

222. Another recent technology that represents a clever approach to computer / human interfaces is the iGesture Pad by a company called Fingerworks (http://www.FingerWorks.com). With the iGesture Pad, users place their hands on a touch-sensitive pad (about the size of a mouse pad), then move their fingers in certain patterns (gestures) that are interpreted as application commands. For example, placing your fingers on the pad in a tight group, then rapidly opening and spreading them, is interpreted as an Open command.
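How a pad might recognize the spreading gesture described in paragraph 222 can be sketched roughly as follows. This is not the iGesture Pad's actual algorithm, which the text does not describe; it is a minimal illustration that compares how far the touch points sit from their centroid at the start and end of a gesture. The function names, the minimum finger count, and the ratio threshold are all assumptions, and the timing ("rapidly") is not modeled.

```python
from math import dist

Point = tuple[float, float]


def mean_spread(touches: list[Point]) -> float:
    """Average distance of the touch points from their centroid."""
    cx = sum(x for x, _ in touches) / len(touches)
    cy = sum(y for _, y in touches) / len(touches)
    return sum(dist((x, y), (cx, cy)) for x, y in touches) / len(touches)


def is_open_gesture(
    start: list[Point],
    end: list[Point],
    min_ratio: float = 2.0,  # hypothetical threshold: spread must at least double
    min_fingers: int = 3,    # hypothetical minimum number of touch points
) -> bool:
    """Return True when a tight group of fingers has spread apart."""
    if len(start) < min_fingers or len(end) < min_fingers:
        return False
    start_spread = mean_spread(start)
    end_spread = mean_spread(end)
    if start_spread == 0:
        # All fingers started on the same point; any spreading counts.
        return end_spread > 0
    return end_spread / start_spread >= min_ratio
```

A real device would also have to track which finger is which between frames and reject slow or partial movements; the sketch only captures the geometric idea of a tight group opening outward.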

223. For more intuitive control of software interfaces, what is needed is a device that tracks eye movements and accurately translates them into mouse movements, so that you could just look at an icon on the screen and the mouse pointer would instantly move there.

224. Importance: This technology represents a leap in intuitive interface devices, and it promises a whole new dimension of control versus the one-dimensional mouse click. Keystrokes and mouse clicks limit a soldier's degree of freedom.

225. Status: It's still a somewhat clumsy translation of intention through physical limbs. Interestingly, some of the best technology in this area comes from companies building systems for people with physical disabilities. For people who can't move their limbs, computer control through alternate means is absolutely essential.

2.11.2. Head Movement Tracking Technology

226. One approach to this is tracking the movement of a person's head and translating that into mouse movements. One device, the Head Mouse (Origin Instruments), does exactly that. You stick a reflective dot on your forehead, put the sensor on top of your monitor, and then move your head to move your mouse.
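The translation of head movement into mouse movement described in paragraph 226 can be sketched as a relative mapping with a gain factor. The sketch assumes the sensor reports how far the reflective dot has moved since the last reading; the function name, the gain value, and the screen size are hypothetical and are not taken from any vendor's product.

```python
def move_cursor(
    cursor: tuple[int, int],
    head_delta: tuple[float, float],
    gain: float = 8.0,                      # hypothetical pixels per sensor unit
    screen: tuple[int, int] = (1920, 1080), # hypothetical screen size in pixels
) -> tuple[int, int]:
    """Return the new cursor position after a small head movement.

    head_delta is the change in the tracked dot's position since the last
    sensor reading, in sensor units. The result is clamped to the screen.
    """
    x = cursor[0] + gain * head_delta[0]
    y = cursor[1] + gain * head_delta[1]
    x = min(max(x, 0), screen[0] - 1)
    y = min(max(y, 0), screen[1] - 1)
    return (round(x), round(y))
```

The gain is the main tuning parameter in such a scheme: a higher value lets small head movements cover the whole screen but makes precise pointing harder, which is one plausible source of the learning curve noted below.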

227. Another company called Madentec (http://www.Madentec.com) offers a similar technology called Tracker One. Place a dot on your forehead, and then you can control the mouse simply by moving your head.

228. In terms of affordable head tracking products for widespread use, a company called NaturalPoint (http://www.NaturalPoint.com) seems to have the best head tracking technology at present: a product called SmartNav. Add a foot switch and you can click with your feet.

229. Importance: Allows for hands-free control via head movement.

230. Status: Current implementations present a learning curve for new users, but it works as promised.

2.11.3. Eye Movement Tracking

231. While tracking head movement is in many ways better than tracking mouse movement, a more intuitive approach, it seems, would be to track actual eye movements. A company called LC Technologies, Inc. is doing precisely that with their EyeGaze systems (http://www.lctinc.com/products.htm). By mounting one or two cameras under your monitor and calibrating the software to your screen dimensions, you can control your mouse by simply looking at the desired position on the screen.
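The calibration step mentioned in paragraph 231 can be illustrated with a simple linear fit. The sketch assumes the user looks at two known screen targets while the system records raw gaze readings, and that a straight-line mapping per axis is adequate; commercial systems typically use more calibration points and more elaborate models. All names and values here are hypothetical and do not describe the EyeGaze software.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AxisCalibration:
    """Linear map from a raw gaze reading to a screen coordinate on one axis."""
    scale: float
    offset: float

    def apply(self, raw: float) -> float:
        return self.scale * raw + self.offset


def calibrate_axis(raw_a: float, raw_b: float,
                   screen_a: float, screen_b: float) -> AxisCalibration:
    """Fit the line through two (raw reading, screen coordinate) pairs."""
    if raw_a == raw_b:
        raise ValueError("calibration readings must differ")
    scale = (screen_b - screen_a) / (raw_b - raw_a)
    offset = screen_a - scale * raw_a
    return AxisCalibration(scale, offset)


def gaze_to_screen(raw: tuple[float, float],
                   cal_x: AxisCalibration, cal_y: AxisCalibration,
                   width: int, height: int) -> tuple[int, int]:
    """Convert a raw gaze reading to a cursor position clamped to the screen."""
    x = min(max(cal_x.apply(raw[0]), 0), width - 1)
    y = min(max(cal_y.apply(raw[1]), 0), height - 1)
    return (round(x), round(y))
```

For example, if raw readings of 0.25 and 0.75 were recorded while the user looked at the left and right screen edges, a later reading of 0.5 would map to the horizontal center of the screen.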

232. This technology was originally developed for people with physical disabilities, yet the potential application of it is far greater. In time, I believe that eye tracking systems will become the preferred method of cursor control for users of personal computers.

233. Eye tracking is quickly emerging as a technology with high potential for widespread adoption by the computing public. Companies such as Tobii Technology (http://www.tobii.se), Seeing Machines (http://www.SeeingMachines.com), SensoMotoric Instruments (http://www.smi.de), Arrington Research (http://www.ArringtonResearch.com), and EyeTech Digital Systems (http://www.eyetechds.com) all offer eye tracking technology with potential for computer / human interface applications. The two most promising companies in this list, in terms of widespread consumer-level use, appear to be Tobii Technology and EyeTech Digital Systems.

234. Importance: Allows for hands-free control via eye movement.

2.11.4. Brain-Computer Interface

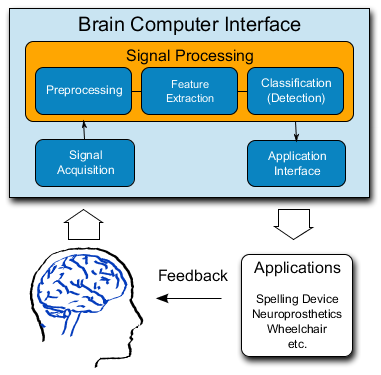

235. Moving to the next level of human-computer interface technology, the ability to control your computer with your thoughts alone seems to be an obvious goal. The technology is called Brain Computer Interface technology, or BCI.

236. Although the idea of brain-controlled computers has been around for a while, it received a spike of popularity in 2004 with the announcement that nerve-sensing circuitry had been implanted in a monkey's brain, allowing it to control a robotic arm by merely thinking. Brain activity produces electrical signals that are detectable on the scalp or cortical surface or within the brain. BCIs translate these signals from mere reflections of brain activity into outputs that communicate the user’s intent without the participation of peripheral nerves and muscles. BCIs can be non-invasive or invasive. Non-invasive BCIs derive the user’s intent from scalp-recorded electroencephalographic (EEG) activity, while invasive BCIs derive the user’s intent from neuronal action potentials or local field potentials recorded from within the cerebral cortex or from its surface. Researchers have studied invasive systems mainly in nonhuman primates and to a limited extent in humans. Invasive BCIs face substantial technical difficulties and involve clinical risks. Surgeons must implant the recording electrodes in or on the cortex. The devices must function well for long periods, and they carry a risk of infection and other damage to the brain.

237. Importance: Imagine the limitless applications of direct brain control. People could easily manipulate cursors on the screen or control electromechanical devices. They could direct software applications, enter text on virtual keyboards, or even drive vehicles on public roads. Today, all these tasks are accomplished by our brains moving our limbs, but the limbs, technically speaking, don't have to be part of the chain of command.

238. Status: The lead researchers in the monkey experiment are now involved in a commercial venture to develop the technology for use in humans. The company, Cyberkinetics Inc., hopes to someday implant circuits in the brains of disabled humans and then allow those people to control robotic arms, wheelchairs, computers or other devices through nothing more than brain behaviour.

2.11.5. Tactile Feedback

239. Another promising area of human-computer interface technology is being explored by companies like Immersion Corporation (http://www.Immersion.com), which offers tactile feedback hardware that allows users to 'feel' their computer interfaces.

240. Slide on Immersion's CyberGlove, and your computer can track and translate detailed hand and finger movements. Add their CyberTouch accessory, and tiny force feedback generators mounted on the glove deliver the sensation of touch or vibration to your fingers. With proper software translation, these technologies give users the ability to manipulate virtual objects using their hands. It's an intuitive way to manipulate objects in virtual space, since nearly all humans have the natural ability to perform complex hand movements with practically no training whatsoever.

241. Another company exploring the world of tactile feedback technologies is SensAble Technologies. Their PHANTOM devices allow users to construct and feel three-dimensional objects in virtual space. Their consumer-level products include a utility for gamers that translates computer game events into tactile feedback (vibrations, hitting objects, gun recoil, etc.).

242. On a consumer level, Logitech makes a device called the iFeel Mouse that vibrates or thumps when your mouse cursor passes over certain on-screen features. Clickable icons, for example, feel like bumps as you mouse over them. The edges of windows can also deliver subtle feedback.

243. Importance: Key technology for modeling and simulation, and for simulated training. Tactile feedback has potential for making human-computer interfaces more intuitive and efficient, even if today's tactile technologies are clunky first attempts. The more senses we can directly involve in our control of computers, the broader the bandwidth of information and intention between human beings and machines.

244. Status: Hasn't seen much success in the marketplace.

2.11.6. Widget Framework for Desktop and Portable Devices

245. Widgets are small-footprint software programs designed to pull in a particular kind of information (often from multiple sources). An emerging standard that supports this kind of combination of data from multiple sources is Enterprise Mashup Markup Language (EMML) 1.0 (www.openmashup.org).

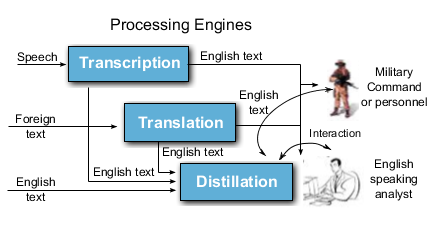
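The idea in paragraph 245 of a widget pulling one kind of information from multiple sources can be sketched in a few lines. This illustration does not use EMML; it only shows the general pattern of fetching items from several sources, tagging each item with its origin, and presenting the most recent ones. The function name, the item fields (`title`, `timestamp`), and the sources are hypothetical.

```python
from typing import Callable, Iterable


def build_widget_feed(
    sources: dict[str, Callable[[], Iterable[dict]]],
    limit: int = 5,
) -> list[dict]:
    """Merge items from several sources into one feed, newest first.

    Each source is a zero-argument callable returning items shaped like
    {"title": str, "timestamp": int}. Every item in the result is tagged
    with the name of the source it came from.
    """
    merged = []
    for name, fetch in sources.items():
        for item in fetch():
            merged.append({**item, "source": name})
    merged.sort(key=lambda item: item["timestamp"], reverse=True)
    return merged[:limit]
```

In a real widget each callable would wrap a network request to a feed or web service; plain functions are used here so that the merging logic can be read and tested on its own.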

2.11.7. Automated Language Processing

246. Foreign language speech and text are indispensable sources of intelligence, but the vast majority of this information goes unexamined. The volume of foreign language data, and the number of sources providing it, is massive and growing daily. Moreover, because transcribing and translating foreign documents is so labor intensive, and because there are too few linguists with suitable language skills to review it all, much foreign language speech and text is never exploited for intelligence and counterterrorism purposes. New and powerful foreign language technology is needed to allow English-speaking analysts to exploit and understand vastly more foreign speech and text than is currently possible.

2.11.7.1. Speech-to-Text Transcription

247. Automatic speech-to-text transcription seeks to produce rich, readable transcripts of foreign news broadcasts and conversations (over noisy channels and/or in noisy environments) despite widely varying pronunciations, speaking styles, and subject matter. Goals for speech-to-text transcription include: (1) providing high accuracy multilingual word-level transcription from speech at all stages of processing and across multiple genres, topics, speakers, and channels (such as Arabic, Chinese, the Web, news, blogs, signals intelligence, and databases); (2) representing and extracting “meaning” out of spoken language by reconciling and resolving jargon, slang, code-speak, and language ambiguities; (3) dynamically adapting to (noisy) acoustics, speakers, topics, new names, speaking styles, and dialects; (4) improving relevance to deliver the information decision-makers need; (5) assimilating and integrating speech across multiple sources to support exploration and analysis and to enable natural queries and drill-down; and (6) increasing portability across languages, sources, and information needs.
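The text does not say how the accuracy of word-level transcription in goal (1) would be measured. One widely used measure is word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn the system's output into a reference transcript, divided by the number of words in the reference. The sketch below is a minimal implementation of that measure, offered as an illustration rather than as the evaluation method intended by the source; the function name is hypothetical.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return the word error rate of hypothesis measured against reference.

    Computes the word-level edit distance (substitutions, deletions,
    insertions) and divides it by the number of reference words.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # previous[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            current.append(min(
                previous[j] + 1,         # reference word missing from hypothesis
                current[j - 1] + 1,      # extra word in hypothesis
                previous[j - 1] + cost,  # match or substitution
            ))
        previous = current
    return previous[-1] / len(ref)
```

Because insertions are counted, the rate can exceed 1.0 when the hypothesis is much longer than the reference. The measure also treats every word as equally important, so it says nothing about goals (2) through (5), which concern meaning and relevance.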

248. Importance: Examples of critical technologies include: improved acoustic modeling; robust feature extraction; better discriminative estimation models; improved language and pronunciation modeling; and language-independent approaches that are able to learn from examples by using algorithms that exploit advances in computational power plus the large quantities of electronic speech and text that are now available. The ultimate goal is to create rapid, robust technology that can be ported cheaply and easily to other languages and domains.

2.11.7.2. Foreign-to-English Translation

249. Goals for foreign-to-English translation include: (1) providing high accuracy machine translation and structural metadata annotation from multilingual text document and speech transcription input at all stages of processing and across multiple genres, topics, and mediums (such as Arabic, Chinese, the Web, news, blogs, signals intelligence, and databases); (2) understanding or at least deriving semantic intent from input strings regardless of source; (3) reconciling and resolving semantic differences, duplications, inconsistencies, and ambiguities across words, passages, and documents; (4) discovering important documents, passages for distillation, and more relevant and accurate facts more efficiently, while decreasing the amount of time required to do so; (5) providing enriched translation output that is formatted, cleaned-up, clear, unambiguous, and meaningful to decision-makers; (6) eliminating the need for human intervention and minimizing delay in information delivery; and (7) developing new language capability quickly, responding swiftly to breaking events, and increasing portability across languages, sources, and information needs.

250. Importance: Examples of critical technologies include: improved dynamic language modeling with adaptive learning; advanced machine translation technology that utilizes heterogeneous knowledge sources; better inference models; language-independent approaches to create rapid, robust technology that can be ported cheaply and easily to any language and domain; syntactic and semantic representation techniques to deal with ambiguous meaning and information overload; and cross-lingual and monolingual, language-independent information retrieval to detect and discover the exact data in any language quickly and accurately, and to flag new data that may be of interest.