2.4. Cloud Computing

93. The "Cloud" in cloud computing describes a combination of interconnected networks. This technology area focuses on breaking up a task into sub-tasks. The sub-tasks are then executed simultaneously on two or more computers that communicate with each other over the "Cloud". Finally, the completed sub-tasks are reassembled to form the result of the original main task. There are many different types of distributed computing systems and many issues to overcome in successfully designing one. Many of these issues deal with accommodating a wide-ranging mix of computer platforms with assorted capabilities that could potentially be networked together.
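The split, execute and reassemble cycle described above can be sketched in a few lines of Python. This is a local simulation under stated assumptions: a thread pool stands in for the networked computers, and the hypothetical `sub_task` (summing squares) stands in for real work. In an actual cloud deployment the sub-tasks would be shipped over the network instead.

```python
from concurrent.futures import ThreadPoolExecutor

def split(data, parts):
    """Break the main task (a list of numbers) into roughly equal sub-tasks."""
    size = max(1, -(-len(data) // parts))  # ceiling division, never zero
    return [data[i:i + size] for i in range(0, len(data), size)]

def sub_task(chunk):
    """Hypothetical unit of work executed on one 'computer'."""
    return sum(x * x for x in chunk)

def sum_of_squares(data, workers=4):
    chunks = split(data, workers)
    # Each worker thread stands in for one networked computer.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_results = list(pool.map(sub_task, chunks))
    # Reassemble the completed sub-tasks into the result of the main task.
    return sum(partial_results)

print(sum_of_squares(list(range(1, 101))))  # 338350
```

The same structure applies whatever the sub-task is; only the splitting and reassembly steps need to understand the task.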

94. Implications : The main goal of a cloud computing system is to connect users and resources in a transparent, open, and scalable way. Ideally this arrangement is drastically more fault tolerant and more powerful than many combinations of stand-alone computer systems. If practiced in a NATO environment, member nations would consume their IT services in the most cost-effective way, over a broad range of services (for example, computational power, storage and business applications) from the "cloud," rather than from on-premises equipment.

2.4.1. Platforms

95. Cloud-computing platforms have given many businesses flexible access to computing resources, ushering in an era in which, among other things, startups can operate with much lower infrastructure costs. Instead of having to buy or rent hardware, users can pay for only the processing power that they actually use and are free to use more or less as their needs change. However, relying on cloud computing comes with drawbacks, including privacy, security, and reliability concerns.

2.4.1.1. Amazon's Elastic Compute Cloud (EC2)

96. Amazon Elastic Compute Cloud (Amazon EC2) is a web service that provides resizable compute capacity in the cloud. It is designed to make web-scale computing easier for developers. If your application needs the processing power of 100 CPUs, you scale up your demand; conversely, if your application is idle, you scale down the amount of computing resources that you allocate.

97. Status : Amazon was the first company to successfully leverage the excess computing resources of its primary business. Currently, CPU cycles and storage cost pennies per hour and per GB, respectively.
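The scale-up/scale-down decision described in paragraph 96 can be sketched as a simple rule. This is a hypothetical policy, not the EC2 API: the function names, the CPUs per instance and the minimum/maximum fleet sizes are all illustrative assumptions.

```python
import math

def desired_instances(cpu_demand, cpus_per_instance, min_instances=0, max_instances=100):
    """Hypothetical rule: allocate just enough instances to cover current CPU demand."""
    if cpu_demand <= 0:
        return min_instances  # idle application: scale down to the floor
    needed = math.ceil(cpu_demand / cpus_per_instance)
    return max(min_instances, min(max_instances, needed))

def scaling_action(current, desired):
    """Translate the target size into a launch/terminate decision."""
    if desired > current:
        return ("launch", desired - current)
    if desired < current:
        return ("terminate", current - desired)
    return ("none", 0)
```

With 2 CPUs per instance, a demand of 100 CPUs yields 50 instances, and an idle application scales down to the configured minimum, so under pay-per-use pricing nothing is paid for capacity that is not needed.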

2.4.1.2. Microsoft's Azure Services Platform

98. Windows® Azure is a cloud services operating system that serves as the development, service hosting and service management environment for the Azure Services Platform. Windows Azure provides developers with on-demand compute and storage to host, scale, and manage Web applications on the Internet through Microsoft® data centers.

99. Status : During the Community Technology Preview (CTP), developers invited to the program (which includes all attendees of the Microsoft Professional Developers Conference 2008, PDC) receive free trial access to the Azure Services Platform SDK, a set of cloud-optimized modular components including Windows Azure and .NET Services, as well as the ability to host their finished application or service in Microsoft datacenters.

2.4.1.3. Google App Engine

100. Google App Engine enables you to build web applications on the same scalable systems that power Google applications. App Engine applications are easy to build, easy to maintain, and easy to scale as your traffic and data storage needs grow. With App Engine, there are no servers to maintain: You just upload your application, and it's ready to serve to your users. Google App Engine applications are implemented using the Python programming language.

101. Status : Currently in the preview release stage. You can use up to 500 MB of persistent storage, and enough CPU and bandwidth for about 5 million page views a month, for free. If you later need additional computing resources, you will have to purchase them.
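App Engine's Python runtime can host applications written to the standard WSGI interface (PEP 333). A minimal sketch of such an application follows. It uses only the standard library rather than the App Engine SDK; the greeting logic and the `call` test helper are illustrative assumptions, and the deployment configuration that App Engine requires is not shown.

```python
from wsgiref.util import setup_testing_defaults

def application(environ, start_response):
    """A minimal WSGI application: greets the caller named in the URL path."""
    name = environ.get("PATH_INFO", "/").strip("/") or "world"
    body = f"Hello, {name}!".encode("utf-8")
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8"),
                              ("Content-Length", str(len(body)))])
    return [body]

def call(app, path):
    """Hypothetical helper: invoke a WSGI app in-process, as a hosting platform would."""
    environ = {"PATH_INFO": path}
    setup_testing_defaults(environ)  # fill in the other required CGI/WSGI keys
    captured = {}
    def start_response(status, headers):
        captured["status"] = status
    body = b"".join(app(environ, start_response))
    return captured["status"], body
```

Because the application is just a callable, the platform is free to decide where and on how many servers it runs, which is what lets the developer avoid maintaining servers.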

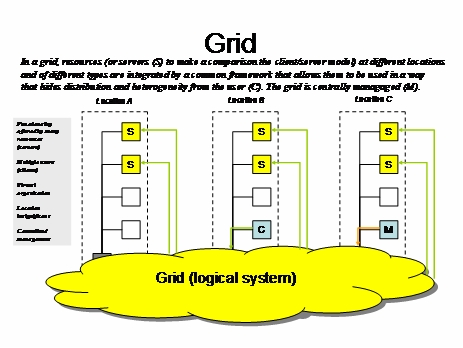

2.4.2. Grid Computing

102. Grid computing is the collective name for technologies that attempt to combine a set of distributed nodes into a common resource pool. The term grid computing stems from an analogy with electric power grids, which combine a large set of resources (power plants, transformers, power lines) into a simple, well-defined product (the power outlet) that the user can use without having to know the details of the infrastructure behind it.

103. Grid computing also typically offers a framework for managing node membership, access control, and life cycle. Common applications of grid computing allow well-defined organisations to share computational or storage resources, so that resources are used efficiently and resource-demanding tasks can be performed that would otherwise have required large specialised and/or local investments.

Figure 2.4. Grid Computing
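The resource pool of paragraphs 102 and 103, together with membership management and access control, can be illustrated with a small sketch. The `ResourcePool` class, its methods and the "most free CPUs first" placement rule are hypothetical assumptions; real grid middleware such as the Globus Toolkit provides far richer (and different) interfaces.

```python
class ResourcePool:
    """Hypothetical grid resource pool with node membership and access control."""

    def __init__(self, members):
        self.members = set(members)   # organisations allowed to use the pool
        self.free = {}                # node name -> free CPUs

    def join(self, node, cpus):
        """A node joins the grid and contributes its CPUs to the pool."""
        self.free[node] = cpus

    def leave(self, node):
        """A node leaves the grid; its capacity disappears from the pool."""
        self.free.pop(node, None)

    def submit(self, org, cpus_needed):
        """Place a job on the node with the most free CPUs, or return None."""
        if org not in self.members:
            raise PermissionError(f"{org} is not a member of this grid")
        candidates = [n for n, c in self.free.items() if c >= cpus_needed]
        if not candidates:
            return None
        node = max(candidates, key=lambda n: self.free[n])
        self.free[node] -= cpus_needed
        return node

    def release(self, node, cpus):
        """Return capacity when a job finishes (part of job life cycle management)."""
        if node in self.free:
            self.free[node] += cpus
```

The user of `submit` never chooses a node, just as a power-grid user never chooses a power plant; the pool hides where the work runs.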

104. While some grid computing systems have been designed for a specific purpose, some standards for Grid infrastructure have also evolved that make the task of setting up a Grid easier and provide a common interface to application developers. Such standardisation efforts have resulted in Globus Toolkit [51] and OGSA (Open Grid Services Architecture) [53].

105. The Global Grid Forum (GGF) [52] is a set of working groups in which much of the work on grid computing standardisation takes place; it is also behind the OGSA standard. Its web site is a good source of information about ongoing work in the field and examples of grid projects.

2.4.2.1. Globus Toolkit

106. The Globus Toolkit [51] offers tools and APIs for building secure grid infrastructures. It provides a security infrastructure, the Grid Security Infrastructure (GSI), on top of which support for resource management, information services, and data management is built. It is developed by the Globus Alliance [50] and, among other uses, serves as a foundation for implementations of OGSA.

2.4.2.2. OGSA

107. OGSA [53] (developed by the Global Grid Forum, GGF [52]) builds on a combination of the Globus Toolkit [51] (developed by the Globus Alliance [50]) and Web service technologies to model and encapsulate resources in the Grid. OGSA defines a number of special Web service interfaces that support service management, dynamic service creation, message notification, and service registration. OGSA has been realised through several underlying specifications, for example OGSI (Open Grid Services Infrastructure) and WSRF (Web Services Resource Framework, developed by OASIS [47]). Today, WSRF is the chosen basis for further development of OGSA.

2.4.2.3. OSGi

108. OSGi is a standard for a framework for remotely manageable networked devices [25]. Applications can be securely provisioned at runtime to a network node running an OSGi framework, which makes it possible to manage the services and functionality of the node dynamically. The OSGi model is based on a service component architecture, in which all functionality in the node is provided as "small" service components (called bundles). Services are aware of one another and may adapt their functionality substantially depending on which other services are available. Services must degrade gracefully when the services they depend upon are not available in the framework. These network nodes are designed to provide their services reliably 24/7/365. Adding an OSGi Service Platform to a networked node/device adds the capability to manage the life cycle of the software components in the device. The ability to add new services makes the node more future-proof and gives it flexibility.

109. OSGi adopts an SOA-based approach in which all bundles deployed into an OSGi framework are described using well-defined service interfaces. Each bundle uses the framework's name service (the service registry) to publish the services it offers and to retrieve the services it needs. OSGi works well with other standards and initiatives, for example Jini or Web Services. OSGi is also an example of a technology where integration is carried out in the Java environment but the actual implementation of the services can be written in other languages, such as C.
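The publish/retrieve pattern and the graceful degradation requirement can be modelled in a few lines. This is a hypothetical Python sketch of the idea, not the OSGi API (OSGi itself is Java-based, with calls such as `BundleContext.registerService`); the `ServiceRegistry` and `ReportBundle` classes and the "Printer" interface name are illustrative assumptions.

```python
class ServiceRegistry:
    """Hypothetical model of a framework name service keyed by interface name."""

    def __init__(self):
        self._services = {}    # interface name -> service object
        self._listeners = []   # callbacks told about (un)registrations

    def register(self, interface, service):
        self._services[interface] = service
        self._notify(interface, service)

    def unregister(self, interface):
        self._services.pop(interface, None)
        self._notify(interface, None)

    def get(self, interface):
        return self._services.get(interface)

    def add_listener(self, listener):
        self._listeners.append(listener)

    def _notify(self, interface, service):
        # Lets bundles stay aware of which other services are available.
        for listener in self._listeners:
            listener(interface, service)

class ReportBundle:
    """Hypothetical consumer that degrades gracefully without its 'Printer' dependency."""

    def __init__(self, registry):
        self.registry = registry

    def report(self, text):
        printer = self.registry.get("Printer")
        if printer is None:
            return f"[queued] {text}"  # degraded mode: no printer available
        return printer(text)
```

The consumer looks the dependency up on every call instead of caching it, so it switches between full and degraded behaviour as services come and go.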

2.4.3. Decentralised Computing

110. Another trend in distributed systems is to decrease the dependence on centralised resources. A centralised resource is typically some service or function implemented by dedicated nodes in the distributed system that many or all other nodes depend on to perform their own services or functions. Examples of such services are shared databases, network routing and configuration functions, and name or catalogue services. Obviously, this may cause dependability problems, as the centralised server becomes a potential single point of failure. From some points of view, decentralisation (i.e., decreasing the dependence on single nodes) is therefore a property to strive for. However, decentralisation may also result in a (sometimes perceived) loss of control that fits poorly with traditional ways of thinking about, for example, security. Decentralisation can thus have a far-reaching impact on the way distributed systems are designed.

111. Taken to its extreme, decentralisation strives for distributed systems where all nodes are considered equal in all respects (except, of course, their performance and capacity), i.e., all nodes are considered peers. This is the foundation of peer-to-peer (P2P) systems, which will be discussed later. In such systems, decentralisation is realised by offering mechanisms to maintain a global shared state that is not stored by any single node.

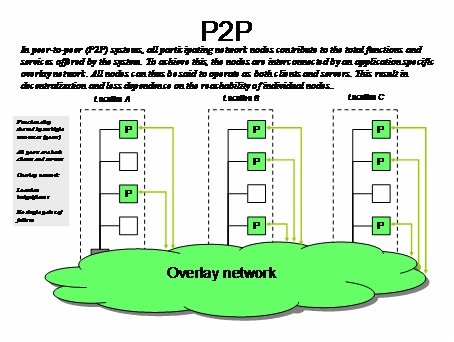

2.4.3.1. Peer-to-Peer (P2P)

112. Peer-to-peer, or P2P for short, is a technology trend that has received much attention in recent years. Unfortunately, much of this attention has been oriented towards questionable applications of P2P, such as illegal file sharing, rather than towards its merits as a technology that addresses important problems with traditional distributed systems.

113. The fundamental idea behind P2P is, as is implied by its name, that all participating nodes in a distributed system shall be treated as peers, or equals. This is a clear deviation from the client/server concept, where some nodes play a central role as servers and other nodes play a role as clients. In those systems, the server nodes become critical for the system function, and thus need to be dependable, highly accessible, and dimensioned to handle a sometimes unpredictable workload. Obviously this may make a server node both a performance bottleneck and a dependability risk. P2P addresses such problems.

Figure 2.5. Peer-To-Peer

114. The fundamental property of many P2P applications is that they allow named objects, such as files, services, or database entries, to be stored spread over all nodes in the system instead of on centralised server nodes. Object names can be numbers or character strings, whichever is appropriate for the application. Once an object is stored in the system, any node can access it just by knowing its name. This means that a P2P system can have a global shared state that does not depend on any centralised node.

115. While in principle avoiding a centralised solution, many early P2P systems, such as the Napster file sharing system, actually relied on some central functions to implement the mapping from object names to object locations. In later generations of P2P, however, decentralisation has been taken a step further by the introduction of overlay networks, mentioned earlier in this report as a form of virtual network.

116. With an overlay network, the node that is responsible for storing an object with a given name can be found by distributed means. Typically the set of all possible object names, called the name space, is divided into subsets such that each node becomes responsible for one subset of the name space. This means that the need for centralised resources is avoided completely. For a node to become part of a P2P system, i.e., to join the overlay network, all that is needed is knowledge of any one node already in the system. As nodes join and leave the system, the responsibility for name space subsets is automatically redistributed.
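The division of the name space among nodes can be sketched with consistent hashing on an identifier ring, the scheme used by Chord. Names are hashed into a circular space and each node is responsible for the identifiers between its predecessor (exclusive) and itself (inclusive). The 16-bit space, the use of SHA-1 and the `Ring` class are assumptions made for illustration; in a real overlay no node holds the full node list, and lookups are routed hop by hop instead.

```python
import bisect
import hashlib

M = 16              # assumed identifier size in bits
SPACE = 2 ** M      # the name space is 0 .. SPACE - 1, arranged as a circle

def ident(name):
    """Map an object or node name into the circular identifier space."""
    digest = hashlib.sha1(name.encode("utf-8")).digest()
    return int.from_bytes(digest, "big") % SPACE

class Ring:
    """Each node owns the identifiers after its predecessor, up to and including itself."""

    def __init__(self):
        self.nodes = []  # sorted node identifiers

    def join(self, node_id):
        bisect.insort(self.nodes, node_id)

    def leave(self, node_id):
        self.nodes.remove(node_id)

    def responsible(self, key_id):
        """Successor of key_id: the first node id >= key_id, wrapping around."""
        if not self.nodes:
            raise LookupError("the ring has no nodes")
        i = bisect.bisect_left(self.nodes, key_id)
        return self.nodes[i % len(self.nodes)]
```

For example, with nodes 100, 200 and 300, identifier 150 belongs to node 200. When node 200 leaves, only the identifiers it owned move to its successor, node 300; all other assignments are unchanged, which is why joins and leaves cause only local redistribution.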

117. The development of overlay-network-based P2P systems has been driven by the aim of improving scalability, lookup guarantees, and robustness. Today, logarithmic logical scalability can be achieved, which means that finding a given node from any other node in the system requires at most log_k(N) node-to-node messages, where k is some constant and N is the maximum number of nodes in the system. Note, however, that this measure does not translate directly into the actual time it takes to send a message between two nodes, as that time also depends on the distribution of nodes over the underlying physical network.
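The logarithmic bound can be checked with a small simulation. The sketch assumes a fully populated ring of N = k^m identifiers in which each node keeps routing entries at distances j * k^i (1 <= j < k, 0 <= i < m). This is a simplified k-ary scheme in the spirit of DKS and Chord (Chord corresponds to k = 2), not either system's actual protocol. Each hop removes the leading base-k digit of the remaining distance, so no lookup needs more than m = log_k(N) messages.

```python
def route(src, dst, k, m):
    """Greedy lookup on a fully populated ring of N = k**m identifiers.

    Assumes every node has routing entries at clockwise distances j * k**i,
    for 1 <= j < k and 0 <= i < m; returns the list of nodes visited.
    """
    n = k ** m
    node, path = src, [src]
    while node != dst:
        dist = (dst - node) % n          # remaining clockwise distance
        step = 1
        while step * k <= dist:          # largest power of k not exceeding dist
            step *= k
        # Jump by j * step, where j is the leading base-k digit of dist.
        node = (node + (dist // step) * step) % n
        path.append(node)
    return path

hops = max(len(route(0, d, 2, 10)) - 1 for d in range(2 ** 10))
print(hops)  # 10 == log2(1024)
```

For N = 2^20 = 1,048,576 nodes, k = 2 gives at most 20 messages per lookup, while k = 4 gives at most 10, at the cost of more routing entries per node ((k - 1) * m, i.e. 30 instead of 20 in this scheme).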

118. The scalability issue has been addressed by a number of P2P implementation techniques proposed within academic research projects, such as Chord [40], Pastry [41], and Tapestry [42]. Scalability has been taken yet another step further by the DKS system developed at the Swedish Institute of Computer Science (SICS). In DKS, the amount of administrative traffic required to keep track of nodes in the overlay network is significantly reduced, and the dimensioning parameters of the system can be fine-tuned within a very general framework (which treats the other techniques mentioned as special cases) so as to improve practical scalability.

119. Another important aspect of P2P systems is the guarantee that any named object stored in the system can also be found. Such guarantees have improved over successive generations of P2P technology, and the DKS system can now give 100% guarantees, assuming that there are no errors, such as physical communication failures, that make nodes unreachable. Since intermittent or lasting communication failures must be expected in any practical system, techniques for improving fault tolerance by replicating data have been investigated and are now being included in the general frameworks of P2P technologies.
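Replication of data, mentioned above as a way to tolerate communication failures, can be sketched with successor-list placement: each object is stored on the node responsible for its name and on the next r - 1 nodes clockwise. This placement scheme (common in ring-based overlays), the replication factor r and the function names are assumptions for illustration; the text does not state which scheme DKS uses.

```python
def replica_nodes(nodes, key_id, r):
    """Return the responsible node followed by its r - 1 successors on the ring."""
    ring = sorted(nodes)
    # Index of the first node id >= key_id, wrapping to 0 past the top of the ring.
    start = next((i for i, n in enumerate(ring) if n >= key_id), 0)
    return [ring[(start + j) % len(ring)] for j in range(min(r, len(ring)))]

def can_find(nodes, key_id, r, failed):
    """The object stays reachable while at least one replica holder is reachable."""
    return any(n not in failed for n in replica_nodes(nodes, key_id, r))
```

With r = 3, an object survives the simultaneous loss of any two of its replica holders; with r = 1, losing the single responsible node makes it unreachable.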

120. However, practical applications of new P2P technologies like DKS in distributed systems need to be investigated in order to determine how well scalability and robustness hold up in practice, given the impact of the underlying infrastructure and application properties. One ongoing project of this kind investigates the properties of a DKS-based implementation of a central service in the OpenSIS standard [49].

121. To summarise, P2P technologies have matured into a practical way of organising distributed systems in a decentralised way that is both highly scalable and reliable. Excellent reviews and descriptions of P2P technologies can be found in [16], [19], and [20].

122. Status : Currently, the advantages of P2P technologies (pooling the computing power and bandwidth of the participants, and eliminating the client/server distinction) do not outweigh the disadvantages (legal obstacles and security vulnerabilities).

2.4.4. Service Delivery Platform (SDP)

123. A Service Delivery Platform (SDP) is an architecture that enables the quick development, deployment and integration (convergence) of broadband, video, wireless and wire-line services, and that can cater to many technologies, including VoIP, IPTV, HSD (High Speed Data), mobile telephony, online gaming, etc.

124. Examples of a converged architecture:

-

A user can see incoming phone calls (Wire-line or Wireless), IM buddies (PC) or the location of friends (GPS Enabled Device) on their television screen.

-

A user can order VoD (Video on Demand) services from their mobile phone or watch streaming video that they have ordered as a video package for both home and mobile phone.

125. SDPs in the converged services world need to be designed and engineered with information and identity engineering techniques as a core discipline, because an SDP supports online users and their services. An SDP needs to be a real-time, multi-function system that interfaces to the back office systems for billing and to the network infrastructure systems. From an information engineering perspective, there could be 20-100 information items associated with a user, their devices and their content; with 10 million users on the system, this means that the SDP must scale to associate between 200 million and one billion pieces of information used in random ways. SDPs also need to address the issues of converged services management, account control, self care, and entitlements, as well as presence-based event-type services. White papers on Service Delivery Platforms, identity engineering and presence-based services can be found at www.wwite.com.

2.4.4.1. Lightweight Directory Access Protocol v.3 (LDAP)

126. The following is a partial list of RFCs specifying LDAPv3 extensions:

| RFC | Description |
|---|---|
| RFC 2247 | Use of DNS domains in distinguished names |
| RFC 2307 | Using LDAP as a Network Information Service |
| RFC 2589 | LDAPv3: Dynamic Directory Services Extensions |
| RFC 2649 | LDAPv3 Operational Signatures |
| RFC 2696 | LDAP Simple Paged Result Control |
| RFC 2798 | inetOrgPerson LDAP Object Class |
| RFC 2849 | The LDAP Data Interchange Format (LDIF) |
| RFC 2891 | Server Side Sorting of Search Results |
| RFC 3045 | Storing Vendor Information in the LDAP root DSE |
| RFC 3062 | LDAP Password Modify Extended Operation |
| RFC 3296 | Named Subordinate References in LDAP Directories |
| RFC 3671 | Collective Attributes in LDAP |
| RFC 3672 | Subentries in LDAP |
| RFC 3673 | LDAPv3: All Operational Attributes |
| RFC 3687 | LDAP Component Matching Rules |
| RFC 3698 | LDAP: Additional Matching Rules |
| RFC 3829 | LDAP Authorisation Identity Controls |
| RFC 3866 | Language Tags and Ranges in LDAP |
| RFC 3909 | LDAP Cancel Operation |
| RFC 3928 | LDAP Client Update Protocol |
| RFC 4370 | LDAP Proxied Authorisation Control |
| RFC 4373 | LBURP |
| RFC 4403 | LDAP Schema for UDDI |
| RFC 4522 | LDAP: Binary Encoding Option |
| RFC 4523 | LDAP: X.509 Certificate Schema |
| RFC 4524 | LDAP: COSINE Schema (replaces RFC 1274) |
| RFC 4525 | LDAP: Modify-Increment Extension |
| RFC 4526 | LDAP: Absolute True and False Filters |
| RFC 4527 | LDAP: Read Entry Controls |
| RFC 4528 | LDAP: Assertion Control |
| RFC 4529 | LDAP: Requesting Attributes by Object Class |
| RFC 4530 | LDAP: entryUUID |
| RFC 4531 | LDAP Turn Operation |
| RFC 4532 | LDAP Who am I? Operation |
| RFC 4533 | LDAP Content Sync Operation |

Table 2.5. Summary of LDAP Related RFCs
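As an illustration of the LDIF format (RFC 2849 in Table 2.5), the sketch below serialises a directory entry such as an inetOrgPerson (RFC 2798) record. It follows the RFC 2849 rule that values which are not "safe strings" (for example non-ASCII text, or a value starting with a space, colon or '<') are written base64-encoded after a double colon. The function names and the example DN are hypothetical, and line folding for long values is deliberately omitted.

```python
import base64

# RFC 2849: characters not allowed at the start of a plain (SAFE-STRING) value.
_UNSAFE_INIT = {"\n", "\r", " ", ":", "<"}

def _is_safe(value):
    if value == "":
        return True
    if value[0] in _UNSAFE_INIT or value.endswith(" "):
        return False  # trailing spaces should also be base64-encoded
    # SAFE-CHAR: any ASCII character except NUL, LF and CR.
    return all(0x01 <= ord(ch) <= 0x7F and ch not in "\r\n" for ch in value)

def ldif_line(attr, value):
    """Format one 'attr: value' line, or 'attr:: base64' for unsafe values."""
    if _is_safe(value):
        return f"{attr}: {value}"
    encoded = base64.b64encode(value.encode("utf-8")).decode("ascii")
    return f"{attr}:: {encoded}"

def ldif_entry(dn, attributes):
    """Build one LDIF content record from a DN and {attribute: [values]}."""
    lines = [ldif_line("dn", dn)]
    for attr, values in attributes.items():
        for value in values:
            lines.append(ldif_line(attr, value))
    return "\n".join(lines) + "\n"
```

For example, `ldif_line("givenName", "Jöns")` yields a `givenName::` line with a base64 value, while `ldif_line("cn", "Jane Doe")` stays as plain text.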

2.4.4.2. Composite Adaptive Directory Services

127. Databases have been with the IT industry for 30 years and traditional directories for the last 20 years, and they will remain with us in the future. However, with the larger-scale, converged-services and event-driven (presence) systems now being developed worldwide (e.g. 3G-IMS), information, identity and presence services engineering, and the technologies that support it, will need to evolve. This evolution could take the form of CADS (Composite Adaptive Directory Services) and CADS-supported Service Delivery Platforms. CADS is an advanced directory service that contains functions for managing identity, presence and content, together with adaptation algorithms for self-tuning; with these functions, it greatly simplifies and enhances the design of converged-services SDPs. See Section 2.4.4.

2.4.5. Networked Computing

128. Networked computing is a set of technologies that treats the network as a computing platform, enables machine-to-machine communication, and offers efficient new ways to help networked computers organise and draw conclusions from online data.

129. Implications : Reusable, repurposable, and reconnectable data/services will promote the convergence of Service Oriented Architecture and the Semantic Web.

2.4.5.1. Web Services

130. Web Services are software systems that enable machine-to-machine interaction over a network. Web Services present themselves as web-based Application Programming Interfaces (APIs) that use XML messages to communicate. No single standard defines Web Services; instead, they are built on four core standards:

-

Extensible Markup Language (XML) - A simple platform independent language used to facilitate communication between different computing systems. (W3C)

-

Simple Object Access Protocol (SOAP) 1.2 - A protocol for exchanging XML-based messages over networks using HTTP, HTTPS, SMTP, or XMPP. (W3C)

-

Web Services Description Language (WSDL) 2.0 - An XML document format that describes the interfaces and protocols needed to connect to a Web Service. (W3C)

-

Universal Description, Discovery and Integration (UDDI) - An XML-based registry that can be queried with SOAP messages and returns WSDL documents, so that Web Services can be found and connected to. (OASIS)
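To make the interplay of XML and SOAP concrete, the sketch below builds and parses a SOAP 1.2 envelope using only Python's standard library. The envelope namespace is the one defined by SOAP 1.2; the `getTrack` operation, its `trackId` parameter and the `urn:example:tracks` namespace are hypothetical. In practice these would be described by the service's WSDL document and the message sent over a transport such as HTTP.

```python
import xml.etree.ElementTree as ET

SOAP12_ENV = "http://www.w3.org/2003/05/soap-envelope"  # SOAP 1.2 envelope namespace
APP_NS = "urn:example:tracks"                            # hypothetical service namespace

ET.register_namespace("env", SOAP12_ENV)
ET.register_namespace("t", APP_NS)

def build_request(track_id):
    """Wrap a hypothetical getTrack request in a SOAP 1.2 envelope."""
    envelope = ET.Element(f"{{{SOAP12_ENV}}}Envelope")
    ET.SubElement(envelope, f"{{{SOAP12_ENV}}}Header")  # optional; empty here
    body = ET.SubElement(envelope, f"{{{SOAP12_ENV}}}Body")
    request = ET.SubElement(body, f"{{{APP_NS}}}getTrack")
    ET.SubElement(request, f"{{{APP_NS}}}trackId").text = track_id
    return ET.tostring(envelope, encoding="unicode")

def read_track_id(message):
    """Parse an envelope and extract the trackId from its Body."""
    root = ET.fromstring(message)
    path = f"{{{SOAP12_ENV}}}Body/{{{APP_NS}}}getTrack/{{{APP_NS}}}trackId"
    return root.find(path).text
```

Because both sides agree only on the XML vocabulary, the sender and receiver can run on entirely different platforms.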

131. There are several efforts to extend the capabilities of Web Services.

-

WS-Security - Defines how to use XML Encryption and XML Signature in SOAP to secure message exchanges, as an alternative or extension to using HTTPS to secure the channel.

-

WS-Reliability - An OASIS standard protocol for reliable messaging between two Web services.

-

WS-ReliableMessaging - A protocol for reliable messaging between two Web services, issued by Microsoft, BEA and IBM; it is currently being standardised by OASIS.

-

WS-Addressing - A way of describing the address of the recipient (and sender) of a message, inside the SOAP message itself.

-

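WS-Transaction - A way of coordinating transactions across Web services (comprising WS-AtomicTransaction and WS-BusinessActivity, built on WS-Coordination).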

2.4.5.1.1. Web Service Specifications

| Technology | Description |
|---|---|
| WS-BPEL | Process composition |
| WSCI | Process composition |
| WSDM | Management and infrastructure |
| JBI | Process composition |
| SCA | Process composition |
| Java-EE | Management and infrastructure |
| Java-RMI | Distributed systems |
| .NET | Management and infrastructure |
| Jini | Distributed systems, Service selection, Management and infrastructure |
| Rio | Distributed systems, Service selection, Process composition, Management and infrastructure |

Table 2.6. Summary of Emerging Web Services Technologies

2.4.5.1.1.1. Business Process Execution Language for Web Services (WS-BPEL or BPEL4WS)

132. The Business Process Execution Language (BPEL) was originally proposed by, among others, Microsoft and Siebel Systems. It is XML-based and is designed as a layer on top of WSDL that provides a standardised way of describing business process flows in terms of Web services. A BPEL process can be used either to describe an executable workflow in a node (orchestration) or to describe the protocol for interaction between participants in a business process (choreography). Status : In 2007, BPEL4WS became an OASIS standard, also referred to as WS-BPEL version 2.0 [23].

2.4.5.1.1.2. Web Service Choreography Interface (WSCI)

133. Other Web service based standards for Web service orchestration and choreography are WSCI, developed by Sun, BEA, Intalio and others, and BPML, initiated by Intalio, Sterling Commerce, Sun and others through the non-profit Business Process Management Initiative (BPMI) corporation. WSCI (Web Services Choreography Interface) extends WSDL (rather than being built on top of WSDL like BPEL) and focuses on the collaborative behaviour of either a service user or a service provider. Status : The WSCI specification is one of the primary inputs to the W3C's Web Services Choreography Working Group, which published a Candidate Recommendation of WS-CDL (Web Services Choreography Description Language) version 1.0 in November 2005 to replace WSCI.

2.4.5.1.1.3. Web Service Distributed Management (WSDM)

134. Web Services Distributed Management (WSDM) [70] is an OASIS [45] standard that specifies infrastructure support for integrating web service management aspects across heterogeneous systems, using management-specific messaging via web services. It consists of two main parts:

-

WSDM-MUWS. Deals with Management Using Web Services (MUWS), i.e., the fundamental capabilities required to manage a resource.

-

WSDM-MOWS. Deals with Management of Web Services (MOWS), and builds on MUWS to specify how web services (as a kind of resource) are managed.

2.4.5.1.1.4. Java Business Integration (JBI)

135. Java Business Integration (JBI) is a Java Specification Request (JSR 208) [72] that aims to extend Java (including Java EE) with an integration environment for business process specifications such as BPEL4WS and those proposed by the W3C Choreography Working Group [71]. It is an example of a technology that implements Enterprise Service Bus (ESB) concepts.

2.4.5.1.1.5. Java Remote Method Invocation (JRMI)

136. Java Remote Method Invocation (JRMI) is a Java-specific standard for accessing Java objects across a distributed system [39].

2.4.5.1.1.6. Jini

137. Jini network technology [29] [30] is an open architecture that enables developers to create network-centric services that are highly adaptive to change. An architecture based on the idea of a federation rather than central control, Jini technology can be used to build adaptive networks that are scalable, evolvable and flexible as typically required in dynamic computing environments. Jini technology provides a flexible infrastructure for delivering services in a network and for creating spontaneous interactions between clients that use these services regardless of their hardware or software implementations.

138. The Jini architecture specifies a discovery mechanism used by clients and services to find each other on the network and to work together to accomplish a task. Service providers supply clients with portable, Java technology based objects that implement the service and give the client access to it. The actual network interaction can be of any type, such as Java RMI [39], CORBA [38], or [54], because the object encapsulates (hides) the communication so that the client only sees the Java object provided by the service.
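The proxy idea described above can be sketched as follows. This Python model does not use the Jini API (which is Java-based); `TrackService`, `LookupService` and `MessageProxy` are hypothetical names. The point it shows is that the client programs against an interface and never sees which protocol the provider's proxy uses to reach the service.

```python
from abc import ABC, abstractmethod

class TrackService(ABC):
    """Hypothetical service interface that clients program against."""

    @abstractmethod
    def position(self, track_id): ...

class LookupService:
    """Hypothetical stand-in for a lookup service: maps interfaces to proxies."""

    def __init__(self):
        self._proxies = {}

    def register(self, interface, proxy):
        self._proxies[interface] = proxy

    def lookup(self, interface):
        return self._proxies.get(interface)

class MessageProxy(TrackService):
    """Proxy supplied by the provider; it hides the wire protocol from the client."""

    def __init__(self, send):
        self._send = send  # transport chosen by the provider (a plain callable here)

    def position(self, track_id):
        return self._send({"op": "position", "id": track_id})
```

A provider could later swap `MessageProxy` for a proxy using a different protocol without any change to client code, which is what lets Jini clients stay independent of the service's hardware and software implementation.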

139. The Rio Project [31] extends the Jini technology [29] [30] to provide dynamic adaptive network architecture and uses a nomadic SOA approach. In a nomadic SOA services can migrate and self optimise their architectural structure to respond to the changing service environment.

140. A fundamental tenet of distributed systems is that they must be crafted for the reality that changes occur on the network. Compute resources may have their assets diminished or may fail, and new ones are introduced into the network. Applications executing on compute resources may fail, or suffer performance degradation as compute resource capabilities and/or assets diminish. The technology used must therefore provide distributed, self-organising, network-centric capabilities, enabling a dynamic, distributed architecture that can adapt to unforeseen changes on the network.

141. Importance : This architecture can facilitate the construction of distributed systems in the form of modular co-operating services.

142. Implications : Can provide a more stable, fault-tolerant, scalable, dynamic, and flexible solution. Jini also makes it easier to upgrade systems while keeping everything running, including old clients.

143. Status : Originally developed and maintained by Sun Microsystems, Jini is now being transferred to the Apache Software Foundation under the project name River. There are many initiatives based on Jini technology, such as various grid architectures and the Rio Project [31].

2.4.5.1.2. Web Services Profiles

144. The Web Services Interoperability organisation (WS-I) is a global industry organisation that promotes consistent and reliable interoperability among Web services across platforms, applications and programming languages. It provides Profiles (implementation guidelines), Sample Applications (Web services demonstrations), and Tools (to monitor interoperability). Looking ahead, WS-I is enhancing the current Basic Profile and providing guidance for interoperable asynchronous and reliable messaging. WS-I's profiles will be critical for making Web services interoperability a practical reality.

145. The first charter, a revision to the existing WS-I Basic Profile Working Group charter, resulted in the development of the Basic Profile 1.2 and the future development of the Basic Profile 2.0. The Basic Profile 1.2 will incorporate asynchronous messaging and will also consider SOAP 1.1 with Message Transmission Optimisation Mechanism (MTOM) and XML-binary optimised Packaging (XOP). The Basic Profile 2.0 will build on the Basic Profile 1.2 and will be based on SOAP 1.2 with MTOM and XOP. The second charter establishes a new working group, the Reliable Secure Profile Working Group, which will deliver guidance to Web services architects and developers concerning reliable messaging with security.

146. Status : In 2006, work began on the Basic Profile 2.0 and the Reliable Secure Profile 1.0. In 2007, the Basic Profile 1.2 and the Basic Security Profile 1.0 were approved. More information about WS-I can be found at www.ws-i.org.

2.4.5.1.3. Web Service Frameworks

147. A list of Frameworks:

| Name | Platform | Destination | Specification | Protocols |
|---|---|---|---|---|
| Apache Axis | Java/C++ | Client/Server | WS-ReliableMessaging, WS-Coordination, WS-Security, WS-AtomicTransaction, WS-Addressing | SOAP, WSDL |
| JSON RPC Java | Java | Server | - | JSON-RPC |
| Java Web Services Development Pack | Java | Client/Server | WS-Addressing, WS-Security | SOAP, WSDL |
| Web Services Interoperability Technology | Java | Client/Server | WS-Addressing, WS-ReliableMessaging, WS-Coordination, WS-AtomicTransaction, WS-Security, WS-Security Policy, WS-Trust, WS-SecureConversation, WS-Policy, WS-MetadataExchange | SOAP, WSDL, MTOM |
| Web Services Invocation Framework | Java | Client | - | SOAP, WSDL |
| Windows Communication Foundation | .Net | Client/Server | WS-Addressing, WS-ReliableMessaging, WS-Security | SOAP, WSDL |
| XFire | Java | Client/Server | WS-Addressing, WS-Security | SOAP, WSDL |
| XML Interface for Network Services | Java | Server | - | SOAP, WSDL |
| gSOAP | C/C++ | Client/Server | WS-Addressing, WS-Discovery, WS-Enumeration, WS-Security | SOAP, XML-RPC, WSDL |
| NuSOAP | PHP | Server | - | SOAP, WSDL |

2.4.5.1.4. Web Services Platforms

2.4.5.1.4.1. Java Enterprise Edition (Java EE, formerly J2EE)

148. Java Enterprise Edition (Java EE, formerly known as Java 2 Platform, Enterprise Edition or J2EE up to version 1.4) [35] is a specification of a platform and a code library that is part of the Java Platform. It is used mainly for developing and running distributed multi-tier Java applications, based largely on modular software components running on a platform (an application server). The platform provides the application with support for transactions, security, scalability, concurrency and management of deployed applications. Applications can easily be ported between different Java EE application servers, and applications developed for Java EE can easily support Web Services. Java EE is often compared to Microsoft .NET [36], a comparison that is beyond the scope of this document. It should be noted, however, that .NET is a product and framework closely tied to development for the Windows operating system, whereas Java EE is a specification that is followed by many product vendors.

2.4.5.1.4.2. Microsoft .NET

149. The Microsoft .NET Framework [36] is a Microsoft product closely tied to application development for the Windows platform. The framework includes the closely related C# programming language (which is nevertheless a formal ISO standard) and the Common Language Runtime (CLR), which, simply put, is Microsoft's response to Java. Programs written for the .NET Framework execute in the CLR, which provides the appearance of an application virtual machine, so that programmers need not consider the capabilities of the specific CPU that will execute the program. The framework also includes libraries for developing Web service applications.

2.4.5.2. Semantic Web

150. The Semantic Web initiative aims to make searches more accurate and to enable increasingly sophisticated information services, such as intelligent agents that can find products and services, schedule appointments and make purchases. The initiative defines a grammar and vocabulary that describe a document's components; this information will enable Web software to act on the meaning of Web content. Semantic Web software and Web services promise to shift the nature of the Web from a publishing and communications medium to an information management environment.

151. Semantic Web software builds on the Extensible Markup Language (XML) and uses Uniform Resource Identifiers (URIs) to name resources, together with the Resource Description Framework (RDF) and the Web Ontology Language (OWL).

152. Semantic Web software makes it possible for an intelligent agent to carry out the request "show me the Unmanned Aerial Vehicles (UAV) operating in the current area of responsibility" even if there is no explicit list, because it knows that "area of responsibility" has the property "location" with the value "Kandahar", and in searching a directory of UAVs it knows to skip UAVs belonging to the United States, whose location value is "Kabul", but include German UAVs, whose location value is "Kandahar".
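The UAV query in paragraph 152 can be approximated in a few lines of code. The sketch below is a minimal illustration, not RDF or SPARQL themselves: it assumes facts are held as (subject, predicate, object) triples, uses hypothetical identifiers (`current_AOR`, `uav_us_1`, `uav_de_1`, `operatedBy`), and answers the question by matching a conjunctive pattern in which a shared variable (`?place`) joins the area of responsibility to each UAV's location. No explicit list of UAVs in the area is ever stored.

```python
from typing import Dict, Iterable, List, Optional, Tuple

Triple = Tuple[str, str, str]
Binding = Dict[str, str]

# Hypothetical facts, expressed as RDF-style (subject, predicate, object) triples.
FACTS: List[Triple] = [
    ("current_AOR", "location", "Kandahar"),
    ("uav_us_1", "type", "UAV"),
    ("uav_us_1", "operatedBy", "United States"),
    ("uav_us_1", "location", "Kabul"),
    ("uav_de_1", "type", "UAV"),
    ("uav_de_1", "operatedBy", "Germany"),
    ("uav_de_1", "location", "Kandahar"),
]

def unify(pattern: Triple, triple: Triple, binding: Binding) -> Optional[Binding]:
    """Extend `binding` so that `pattern` matches `triple`, or return None."""
    result = dict(binding)
    for p, t in zip(pattern, triple):
        if p.startswith("?"):              # a variable
            if p in result and result[p] != t:
                return None                # conflicts with an earlier binding
            result[p] = t
        elif p != t:                       # a constant that does not match
            return None
    return result

def query(facts: Iterable[Triple], patterns: List[Triple]) -> List[Binding]:
    """Return every binding that satisfies all patterns (a basic graph pattern)."""
    fact_list = list(facts)
    bindings: List[Binding] = [{}]
    for pattern in patterns:
        bindings = [
            extended
            for b in bindings
            for fact in fact_list
            if (extended := unify(pattern, fact, b)) is not None
        ]
    return bindings

# "Show me the UAVs operating in the current area of responsibility."
results = query(FACTS, [
    ("current_AOR", "location", "?place"),   # where is the AOR?
    ("?uav", "type", "UAV"),                  # which things are UAVs?
    ("?uav", "location", "?place"),          # ...located in that same place?
])
for r in results:
    print(r["?uav"])  # prints uav_de_1 only
```

A production system would instead load the data into an RDF store and express the same pattern as a SPARQL query; the join on a shared variable is the same idea.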

153. Semantic Web software organises Web information so that search engines and intelligent agents can understand properties and relationships. A university, for example, could be defined as an institution of higher education, which is a class of objects that has a set of properties like a population of students.
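The university example relies on class membership being inherited along a hierarchy. The sketch below assumes a hypothetical extra level (`EducationalInstitution`) and hypothetical property names, and follows subclass links transitively in the spirit of RDF Schema's `rdfs:subClassOf`, so an instance declared only as a `University` is also recognised as an institution of higher education with a student population. It is an illustration of the idea, not an OWL reasoner.

```python
from typing import Dict, List, Set

# Hypothetical class hierarchy: class -> its direct superclasses.
SUBCLASS_OF: Dict[str, Set[str]] = {
    "University": {"InstitutionOfHigherEducation"},
    "InstitutionOfHigherEducation": {"EducationalInstitution"},
}

# Hypothetical properties associated with each class.
CLASS_PROPERTIES: Dict[str, Set[str]] = {
    "InstitutionOfHigherEducation": {"studentPopulation"},
    "EducationalInstitution": {"location"},
}

def superclasses(cls: str) -> Set[str]:
    """All classes `cls` belongs to, following subclass links transitively."""
    result: Set[str] = {cls}
    stack: List[str] = [cls]
    while stack:
        current = stack.pop()
        for parent in SUBCLASS_OF.get(current, set()):
            if parent not in result:       # avoid revisiting (and cycles)
                result.add(parent)
                stack.append(parent)
    return result

def expected_properties(cls: str) -> Set[str]:
    """Properties inherited from every class at or above `cls`."""
    props: Set[str] = set()
    for c in superclasses(cls):
        props |= CLASS_PROPERTIES.get(c, set())
    return props

print(sorted(superclasses("University")))
# ['EducationalInstitution', 'InstitutionOfHigherEducation', 'University']
print(sorted(expected_properties("University")))
# ['location', 'studentPopulation']
```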

154. The World Wide Web Consortium released standards in February 2004 that define the two foundation elements of the Semantic Web initiative: the Resource Description Framework (RDF), which provides a structure for interpreting information, and the Web Ontology Language (OWL), which provides a means for defining the properties and relationships of objects in Web information.

155. Web services provide a way for software applications to communicate with each other over the Web, enabling a form of distributed computing in which simple services can be combined to carry out complicated tasks such as financial transactions.
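Combining simple services into a more complicated task can be sketched as follows. The services, method names and account identifiers are hypothetical, and plain method calls stand in for what would be remote Web service operations (for example, SOAP calls described by WSDL) in a real deployment. The composite `transfer` uses only the operations that the simpler services expose.

```python
from typing import Dict, List

class AccountService:
    """Hypothetical simple service: holds balances and offers debit/credit."""
    def __init__(self, balances: Dict[str, int]) -> None:
        self.balances = dict(balances)

    def debit(self, account: str, amount: int) -> None:
        if self.balances.get(account, 0) < amount:
            raise ValueError(f"insufficient funds in {account}")
        self.balances[account] -= amount

    def credit(self, account: str, amount: int) -> None:
        self.balances[account] = self.balances.get(account, 0) + amount

class AuditService:
    """Hypothetical simple service: records every completed transfer."""
    def __init__(self) -> None:
        self.log: List[str] = []

    def record(self, entry: str) -> None:
        self.log.append(entry)

def transfer(accounts: AccountService, audit: AuditService,
             src: str, dst: str, amount: int) -> bool:
    """Composite service built only from calls to the simpler services."""
    try:
        accounts.debit(src, amount)
    except ValueError:
        return False                       # nothing changed, nothing to undo
    accounts.credit(dst, amount)
    audit.record(f"{src}->{dst}:{amount}")
    return True

accounts = AccountService({"alice": 100, "bob": 0})
audit = AuditService()
ok = transfer(accounts, audit, "alice", "bob", 30)
failed = transfer(accounts, audit, "alice", "bob", 500)
print(ok, failed, accounts.balances)  # True False {'alice': 70, 'bob': 30}
```

A real financial transaction spread across remote services would also need distributed transaction handling or compensation steps, which this sketch omits.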

156. Our most fundamental sensors are our senses themselves. They are quite sophisticated, the product of a complex evolutionary design. And yet we've improved on them as time has passed: telescopes and microscopes extend our eyes, thermometers extend our touch, and satellite dishes extend our ears. In addition, virtual sensors have been used for many years to monitor our computing environments. Smaller and more powerful computer processors allow sensors to be small and inexpensive enough that they can be everywhere. And with the vast IPv6 address space (2^128, roughly 3.4 × 10^38 addresses), they can all be networked.

157. Networks, human or technological, are designed to communicate information from one point to others. But the value of a network is entirely dependent on the information it contains. And so the sensors that feed information into a network play a crucial role in maximising a network's value. Simply put, the better the sensors and the better the information they provide, the more valuable the network becomes.

158. Advanced sensors that report directly on their physical environment will enable truly revolutionary applications. Sensors already exist that can detect, measure, and transmit a wide range of physical qualities, such as temperature, pressure, colour, shape, sound, motion, and chemical composition. And as sensors become more sophisticated, they are also shrinking in size; some are so tiny that they are difficult to detect.

159. Emerging sensor network technologies will be autonomous, intelligent, and mobile. These sensors will reconfigure themselves to achieve particular tasks. These capabilities will impose stringent requirements on the computing and delivery systems of the future: the networks and computing systems must reorganise themselves to serve distributed agents that need to communicate and exchange decisions, actions, and knowledge with other agents in a secure environment.

2.4.5.3. Service Component Architecture

160. Service Component Architecture (SCA) [73] is a technology development initiative driven by a group of middleware vendors. SCA extends and complements existing standards for service implementation and for Web services; its goal is to simplify application development and the implementation of SOA.

161. SCA components operate at a business level and are decoupled from the actual implementation technology and infrastructure used. SCA uses Service Data Objects (SDO) [74] to represent the business data that forms the parameters and return values exchanged between services. SCA thus provides uniform service access and a uniform representation of service data.
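To illustrate the decoupling described in paragraph 161, the sketch below models a component that holds a reference to a business-level service interface and exchanges generic data objects with it. Everything here is hypothetical and written in Python for brevity: `DataObject` is only a stand-in for an SDO, and passing the implementation to the constructor stands in for SCA's assembly-time wiring. It is not the SCA or SDO API. Swapping `FixedQuoteImpl` for `TableQuoteImpl` changes the implementation without touching `PortfolioComponent`.

```python
from typing import Any, Dict, Protocol

class DataObject:
    """Hypothetical stand-in for an SDO: a typed bag of named properties."""
    def __init__(self, type_name: str, **props: Any) -> None:
        self.type_name = type_name
        self._props: Dict[str, Any] = dict(props)

    def get(self, name: str) -> Any:
        return self._props[name]

    def set(self, name: str, value: Any) -> None:
        self._props[name] = value

class StockQuoteService(Protocol):
    """Business-level interface; says nothing about how it is implemented."""
    def quote(self, request: DataObject) -> DataObject: ...

class FixedQuoteImpl:
    """One possible implementation: a local stub with a fixed price."""
    def quote(self, request: DataObject) -> DataObject:
        return DataObject("Quote", symbol=request.get("symbol"), price=100)

class TableQuoteImpl:
    """Another implementation: prices looked up in a table."""
    def __init__(self, prices: Dict[str, int]) -> None:
        self.prices = dict(prices)

    def quote(self, request: DataObject) -> DataObject:
        symbol = request.get("symbol")
        return DataObject("Quote", symbol=symbol, price=self.prices[symbol])

class PortfolioComponent:
    """Component with a reference to a StockQuoteService, wired at assembly."""
    def __init__(self, quotes: StockQuoteService) -> None:
        self.quotes = quotes

    def value(self, symbol: str, shares: int) -> int:
        reply = self.quotes.quote(DataObject("QuoteRequest", symbol=symbol))
        return reply.get("price") * shares

# "Assembly": the wiring decision lives outside the component's code.
print(PortfolioComponent(FixedQuoteImpl()).value("ABC", 3))              # 300
print(PortfolioComponent(TableQuoteImpl({"ABC": 42})).value("ABC", 3))   # 126
```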

162. Status : The current SCA specifications are published as a draft (version 0.9) on the vendors' web sites [73].